Face2Statistics: User-Friendly, Low-Cost and Effective Alternative to In-Vehicle Sensors/Monitors for Drivers

Zeyu Xiong, Jiahao Wang, Wangkai Jin, Junyu Liu, Yicun Duan, Zilin Song, and Xiangjun Peng

Published in HCI 2022

Human-Computer Interaction

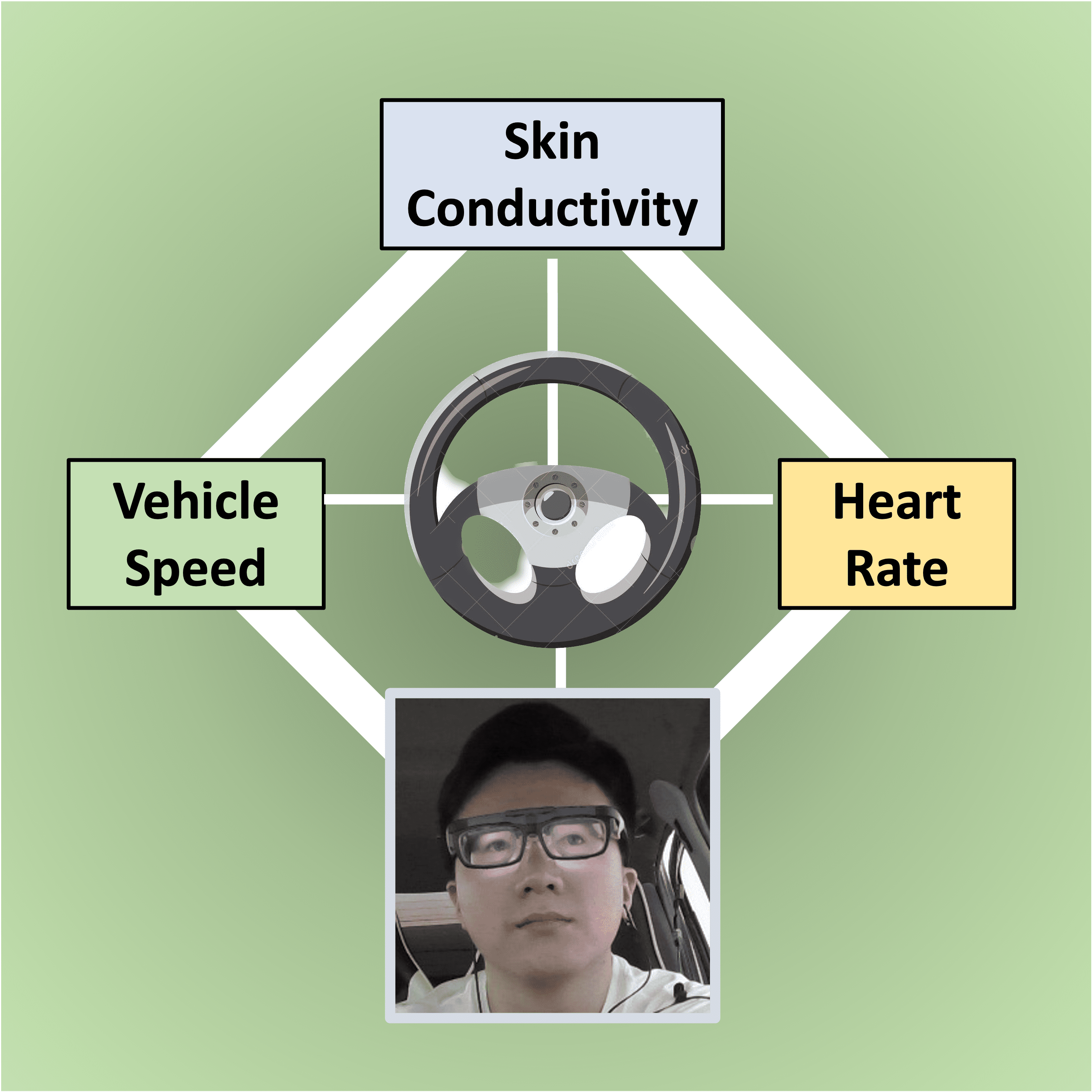

Motivation

We present a comprehensive roadmap for delivering user-friendly, low-cost and effective alternatives to in-vehicle sensors/monitors for extracting drivers' statistics.

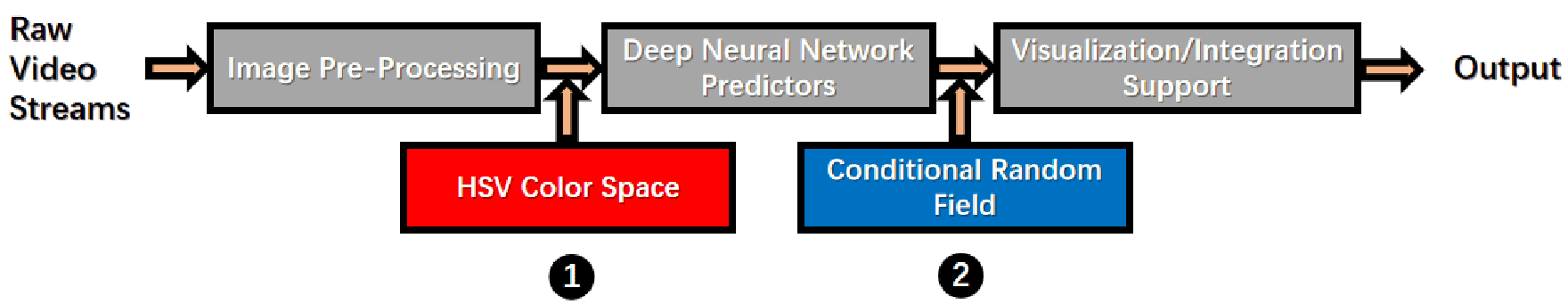

Overall Design

Input

Raw video streams (besides the drivers' facial expressions, the frames contain many noisy pixels)

Step 1

Pre-processing the input images to retain only the facial expressions, which improves the performance of Face2Statistics
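Step 1 can be pictured as cropping each frame to a face bounding box and then resampling the crop to a fixed input size. This is a minimal sketch under stated assumptions: frames are nested lists of pixel values, and some face detector (not shown, and not necessarily the one Face2Statistics uses) has already returned a box as `(x, y, width, height)`. `crop_face` and `resize_nearest` are hypothetical helpers.

```python
def crop_face(frame, box):
    """Keep only the pixels inside a face bounding box given as (x, y, width, height)."""
    x, y, w, h = box
    rows, cols = len(frame), len(frame[0])
    # Clamp the box to the frame so a detector box touching the border stays valid.
    x0, y0 = max(0, x), max(0, y)
    x1, y1 = min(cols, x + w), min(rows, y + h)
    if x0 >= x1 or y0 >= y1:
        raise ValueError("bounding box does not overlap the frame")
    return [row[x0:x1] for row in frame[y0:y1]]


def resize_nearest(image, out_h, out_w):
    """Nearest-neighbour resampling so every face crop has the same input size."""
    in_h, in_w = len(image), len(image[0])
    return [
        [image[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
        for r in range(out_h)
    ]


# Toy 4x4 "frame" whose pixel value encodes its position (row * 10 + column).
frame = [[r * 10 + c for c in range(4)] for r in range(4)]
face = crop_face(frame, (1, 1, 2, 2))  # hypothetical detector output
print(face)  # [[11, 12], [21, 22]]
print(resize_nearest(face, 4, 4))
```

Nearest-neighbour resampling only keeps the sketch dependency-free; a real pipeline would more likely use an image library with bilinear or area interpolation.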

Step 2

Exploring different deep neural network-driven predictors

First Attempt: Convolutional Neural Networks (CNNs)

Second Attempt: Long Short-Term Memory (LSTM) Recurrent Neural Network (RNN)

Third Attempt: Bidirectional Long Short-Term Memory (BiLSTM) Recurrent Neural Network (RNN)
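The difference between the second and third attempts is easiest to see in a forward pass. The sketch below is a minimal, framework-free illustration that assumes a single hidden unit, scalar inputs (for example, one feature per video frame) and hand-set weights. The names `lstm_pass` and `bilstm` and the weight keys are hypothetical; the actual predictors use trained, multi-unit layers.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def lstm_pass(inputs, w):
    """Run a single-unit LSTM over scalar inputs and return the hidden state at each step."""
    h, c = 0.0, 0.0
    outputs = []
    for x in inputs:
        i = sigmoid(w["wi"] * x + w["ui"] * h + w["bi"])    # input gate
        f = sigmoid(w["wf"] * x + w["uf"] * h + w["bf"])    # forget gate
        o = sigmoid(w["wo"] * x + w["uo"] * h + w["bo"])    # output gate
        g = math.tanh(w["wg"] * x + w["ug"] * h + w["bg"])  # candidate cell update
        c = f * c + i * g
        h = o * math.tanh(c)
        outputs.append(h)
    return outputs


def bilstm(inputs, w_fwd, w_bwd):
    """Pair forward and backward hidden states for every time step."""
    fwd = lstm_pass(inputs, w_fwd)
    # Run over the reversed sequence, then flip back so index t lines up with fwd[t].
    bwd = lstm_pass(inputs[::-1], w_bwd)[::-1]
    return list(zip(fwd, bwd))


weights = {k: 0.5 for k in ("wi", "ui", "bi", "wf", "uf", "bf",
                            "wo", "uo", "bo", "wg", "ug", "bg")}
states = bilstm([0.5, -1.0, 2.0], weights, weights)
print(states)
```

At each step the forward state has seen the frames up to t and the backward state has seen the frames from t to the end, so a BiLSTM can use context on both sides of a frame while a unidirectional LSTM cannot.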

Step 3

Visualizing predicted results

Key Design Choices

(1) We use the HSV color space instead of the RGB color space to reduce the variance in illumination across pixels.

(2) We derive personalized parameters from Pearson correlation coefficients and apply them to a conditional random field (CRF) to customize predictions for each driver.
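Design choice (1) can be illustrated with Python's standard `colorsys` module. The sketch assumes that an illumination change acts as a uniform scaling of a pixel's RGB values, which simplifies real lighting. Under that assumption hue and saturation stay fixed and only the value (V) channel moves, whereas every RGB channel varies. The skin-tone patch is made-up illustration data.

```python
import colorsys
from statistics import pvariance


def to_hsv(pixels):
    """Convert 8-bit (r, g, b) pixels to (h, s, v) tuples with components in [0, 1]."""
    return [colorsys.rgb_to_hsv(r / 255, g / 255, b / 255) for r, g, b in pixels]


# Made-up patch: one skin tone seen under four brightness levels.
base = (200, 120, 80)
patch = [tuple(round(v * s) for v in base) for s in (1.0, 0.75, 0.5, 0.25)]
hsv = to_hsv(patch)

# Variance across pixels of one RGB channel versus each HSV channel.
red_var = pvariance([r / 255 for r, _, _ in patch])
hue_var = pvariance([h for h, _, _ in hsv])
sat_var = pvariance([s for _, s, _ in hsv])
val_var = pvariance([v for _, _, v in hsv])

print(f"red: {red_var:.4f}  hue: {hue_var:.2e}  sat: {sat_var:.2e}  value: {val_var:.4f}")
```

The brightness change is isolated in V, so a model can rely more on H and S when skin pixels are lit unevenly.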
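Design choice (2) combines two standard pieces: the Pearson correlation coefficient, r = cov(x, y) / (σx σy), and best-path (Viterbi) decoding in a linear-chain conditional random field. The poster does not say how the coefficients become CRF parameters, so this sketch assumes one hypothetical scheme: the lag-1 autocorrelation of a driver's past labels sets the transition scores, rewarding label persistence for drivers whose statistics change smoothly. Emission scores stand in for per-frame outputs of a network such as the BiLSTM. `personalized_transitions` and all data are illustrative only.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        return 0.0  # undefined for constant input; treat as uncorrelated
    return cov / (sx * sy)


def personalized_transitions(history, n_labels):
    """Hypothetical: turn a driver's lag-1 label autocorrelation into CRF transition scores."""
    r = pearson(history[:-1], history[1:])
    # Positive r rewards staying on the same label; negative r rewards switching.
    return [[r if i == j else -r for j in range(n_labels)] for i in range(n_labels)]


def viterbi(emissions, transitions):
    """Highest-scoring label path in a linear-chain CRF (emission + transition scores)."""
    n_labels = len(transitions)
    score = list(emissions[0])
    back = []
    for frame in emissions[1:]:
        new_score, ptr = [], []
        for j in range(n_labels):
            best_i = max(range(n_labels), key=lambda i: score[i] + transitions[i][j])
            new_score.append(score[best_i] + transitions[best_i][j] + frame[j])
            ptr.append(best_i)
        score = new_score
        back.append(ptr)
    # Trace back from the best final label.
    label = max(range(n_labels), key=lambda j: score[j])
    path = [label]
    for ptr in reversed(back):
        label = ptr[label]
        path.append(label)
    return path[::-1]


history = [0, 0, 0, 1, 1, 1, 0, 0]  # made-up past labels for one driver
emissions = [[2, 0], [2, 0], [0, 0.5], [2, 0], [2, 0]]  # frame 3 is ambiguous
print(round(pearson(history[:-1], history[1:]), 4))  # 0.4167
print(viterbi(emissions, [[0.0, 0.0], [0.0, 0.0]]))  # [0, 0, 1, 0, 0]
print(viterbi(emissions, personalized_transitions(history, 2)))  # [0, 0, 0, 0, 0]
```

With zero transition scores the decoder follows the per-frame maximum and flips on the ambiguous third frame. With this driver's personalized scores (r = 5/12), the smoother path scores higher.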

Experimental Results

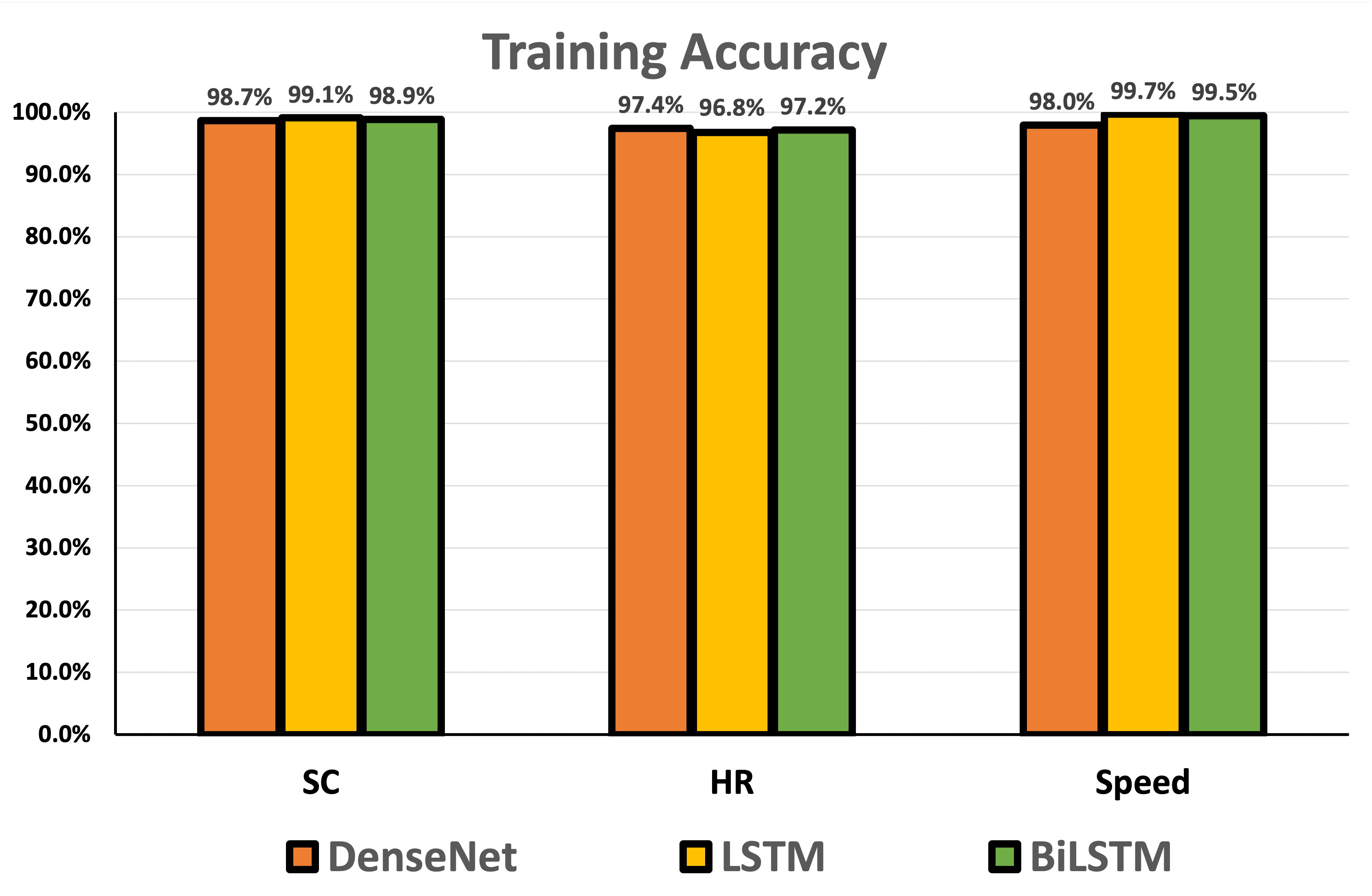

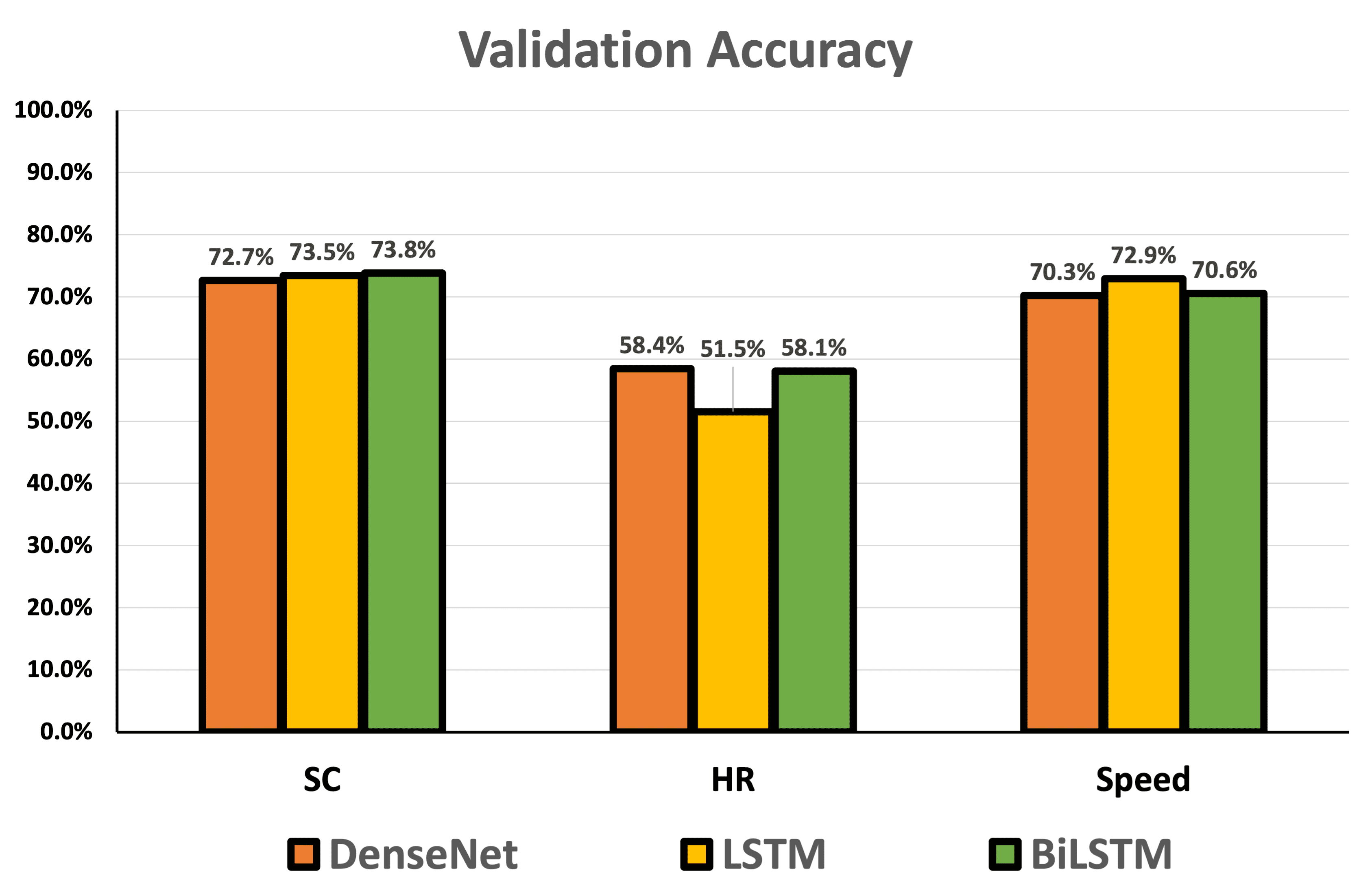

(1) Comparisons between Different Neural Network Models

The training and validation accuracies of DenseNet (a CNN architecture), LSTM and BiLSTM are shown below.

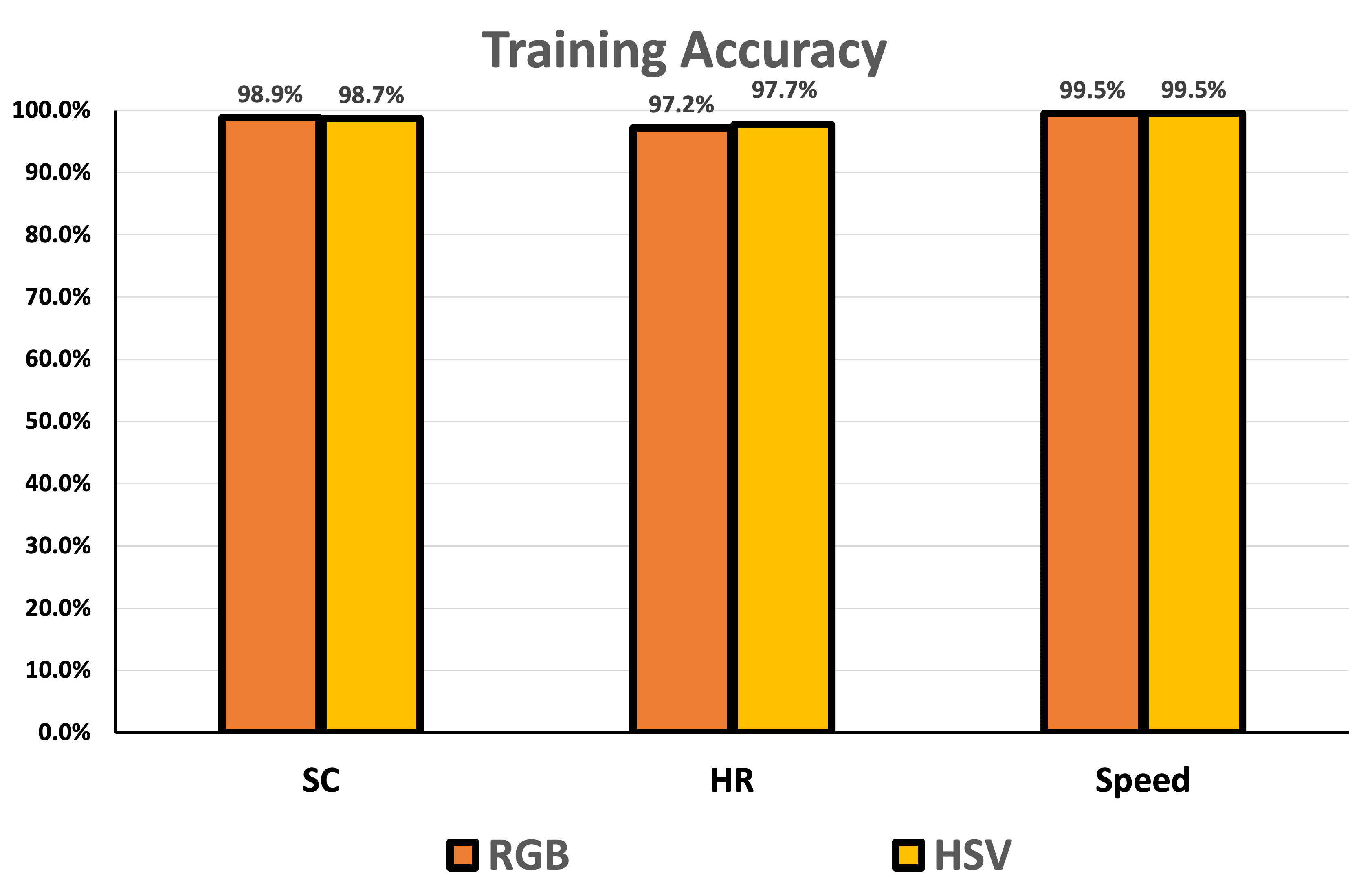

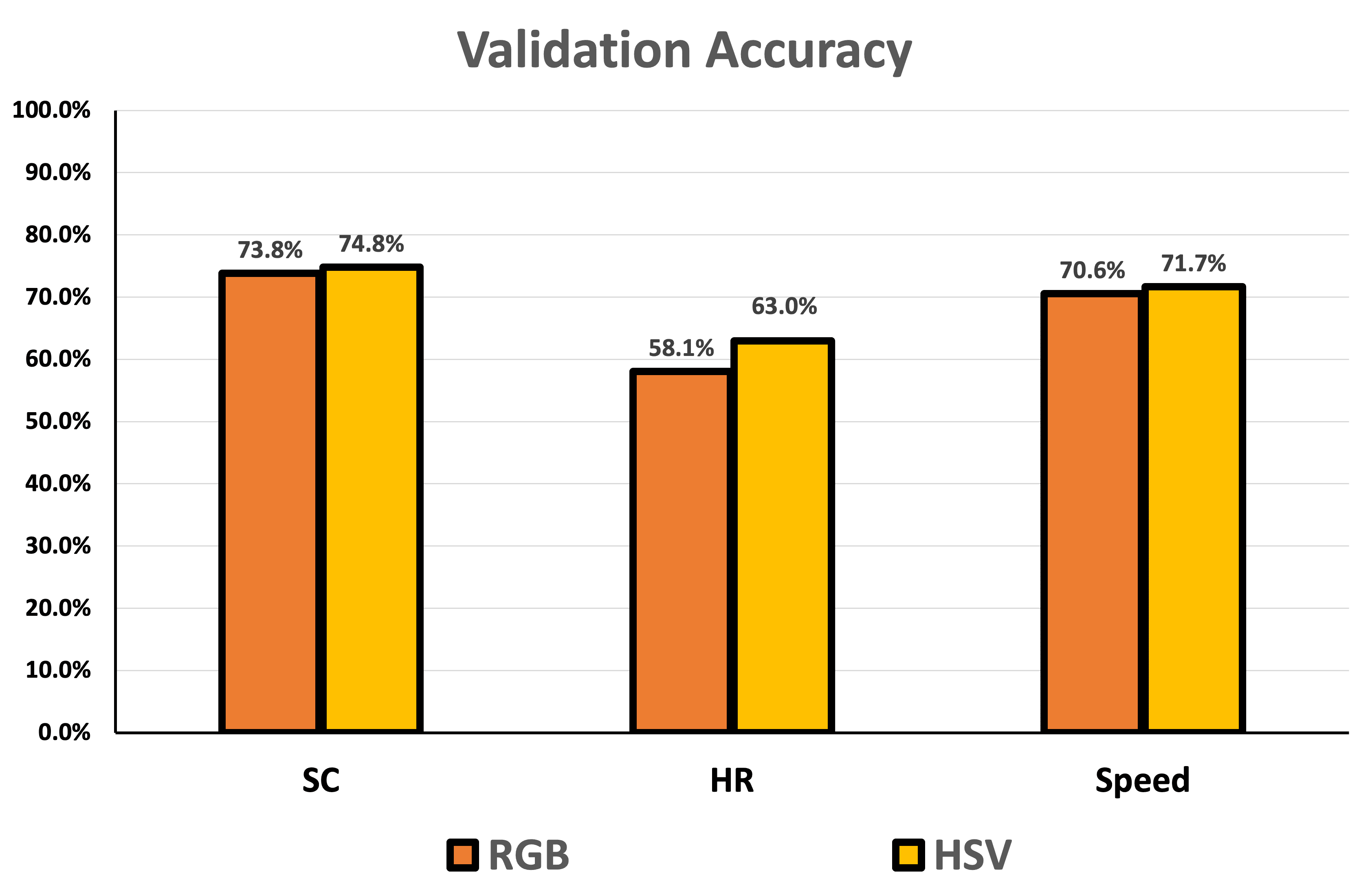

(2) Comparisons between Different Color Models

The training and validation accuracies obtained with the RGB and HSV color spaces are shown below.

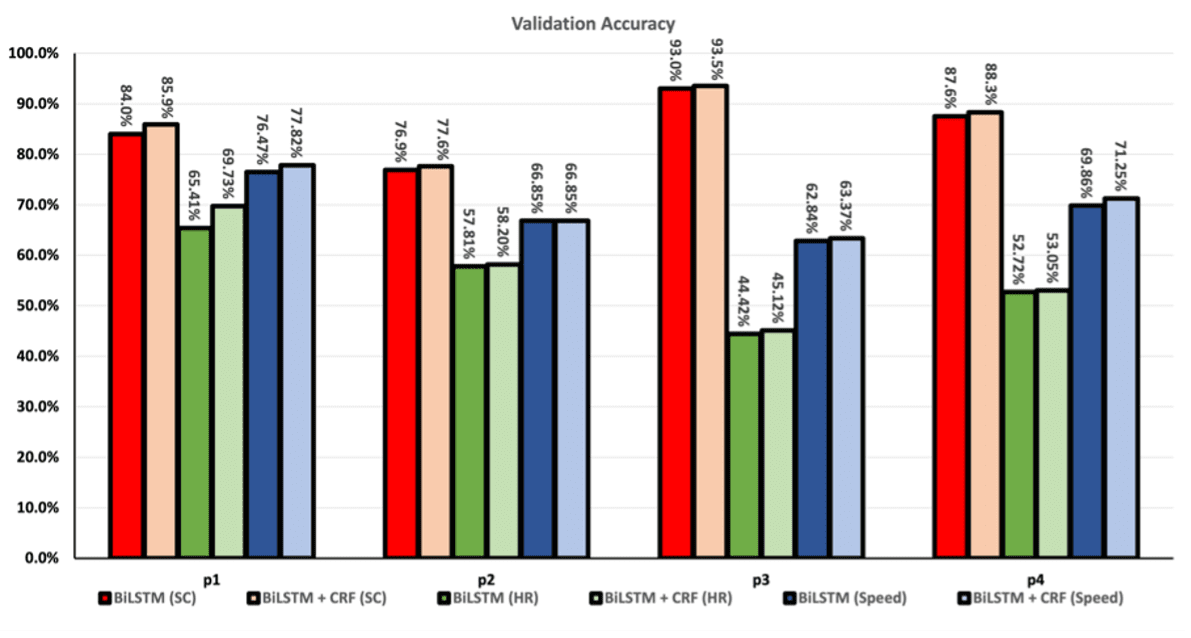

(3) Comparisons between Models with and without CRF

We compare the validation accuracy of BiLSTM with and without CRF support for four different drivers.

Reference

Zeyu Xiong, Jiahao Wang, Wangkai Jin, Junyu Liu, Yicun Duan, Zilin Song, and Xiangjun Peng. 2021. Face2Statistics: User-Friendly, Low-Cost and Effective Alternative to In-Vehicle Sensors/Monitors for Drivers. In Proceedings of the 24th International Conference on Human-Computer Interaction (HCI’22).